VoC Expert Insights: Kayla Eliaza from Notion

At our first virtual meetup last week, we sat down with Kayla Eliaza from Notion, a seasoned Voice of Customer (VoC) pro. With nearly a decade of experience building and shaping VoC programs at companies like Asana and Notion, she has tackled gnarly feedback challenges and knows what works (and what doesn’t).

Here are some of the highlights from the conversation—no fluff, just the good stuff.

What’s the big deal about VoC?

A solid VoC program isn’t just “nice to have.” It’s how companies:

- Get crystal-clear on what customers actually want.

- Cut through the noise of feedback chaos.

- Build products that make people say, “Finally, they get me!”

But building a VoC program? It’s not for the faint of heart. Kayla shared how to get it off the ground without losing your mind.

Getting Started: Walk Before You Run

“Don’t try to capture feedback from every single team right away. Start small—one or two teams—and focus on making the process clear and actionable.”

Most people want to do everything at once when starting a VoC program. Bad idea. Kayla’s advice? Start small.

- Talk to Customer-Facing Teams: These folks know what customers want. Start there.

- Focus on Product’s Needs: If your insights aren’t useful to the product team, your program won’t get far.

- Secure an Advocate: Find someone in leadership or product who can champion the program. An early win (like building a feature based on VoC insights) builds credibility fast.

How Do You Know It’s Working?

“There’s no one-size-fits-all metric for VoC. It all depends on what your goals are—whether it’s driving more feature requests, better ones, or improving customer satisfaction.”

Spoiler: There’s no magical VoC success metric. But here’s how Kayla approaches it:

- Define your goals. Are you aiming for better feature requests? Higher customer satisfaction? More engagement?

- Track the right support metrics, like response times, satisfaction scores, and ticket volumes.

- Segment insights by customer tier, ARR, or other categories—but don’t overvalue “current” ARR. Potential future ARR matters too.

Pro tip from Kayla: Start small, prove value, and scale up.

“I think less about metrics to measure the success of the program and more about the metrics that help build the program’s case—things like customer satisfaction ratings, ticket response times, and where feature requests are coming from.”

Biggest VoC challenges—and how to beat them

Kayla doesn’t sugarcoat it: VoC is hard. The toughest part? Getting buy-in.

“The hardest part of building a VoC program is proving your worth—how do you get people to believe in the value of the insights you’re bringing?”

Here’s how to win people over:

- Build strong relationships with product and leadership.

- Understand what really matters to product teams—hint: it’s not just feature requests; it’s the problems behind them.

- Secure quick wins to show value fast.

Key VoC trends to watch

Kayla pointed to two major shifts happening in VoC:

- VoC Programs Are on the Rise. More companies are making VoC a priority, and it’s about time.

- AI Is Changing Everything. From spotting trends to tracking feedback over time, AI tools are helping teams get ahead.

One of Kayla's hot takes:

“There’s value in recency bias for VoC. Knowing what customers are saying right now is important, but being able to track how trends change over time is equally valuable.”

Advice from the Q&A

Our audience had some awesome questions.

Q: How do you stop other teams from co-opting the term “Voice of Customer” (VoC) for general feedback?

Kayla’s Answer: You don’t need to stop them. Instead, focus on alignment. Talk to other teams to define consistent metrics and processes, such as tracking customer email, ARR, and location. This ensures everyone understands and uses VoC data consistently.

Q: How do you drive accountability for VoC insights and ensure they lead to action?

Kayla’s Answer: Build strong relationships with product teams and secure an advocate in leadership. An early win—such as a successfully launched feature based on VoC insights—demonstrates value and drives future action. Avoid strong-arming; instead, encourage collaboration and enthusiasm for the program.

Q: How do you collect and analyze feedback?

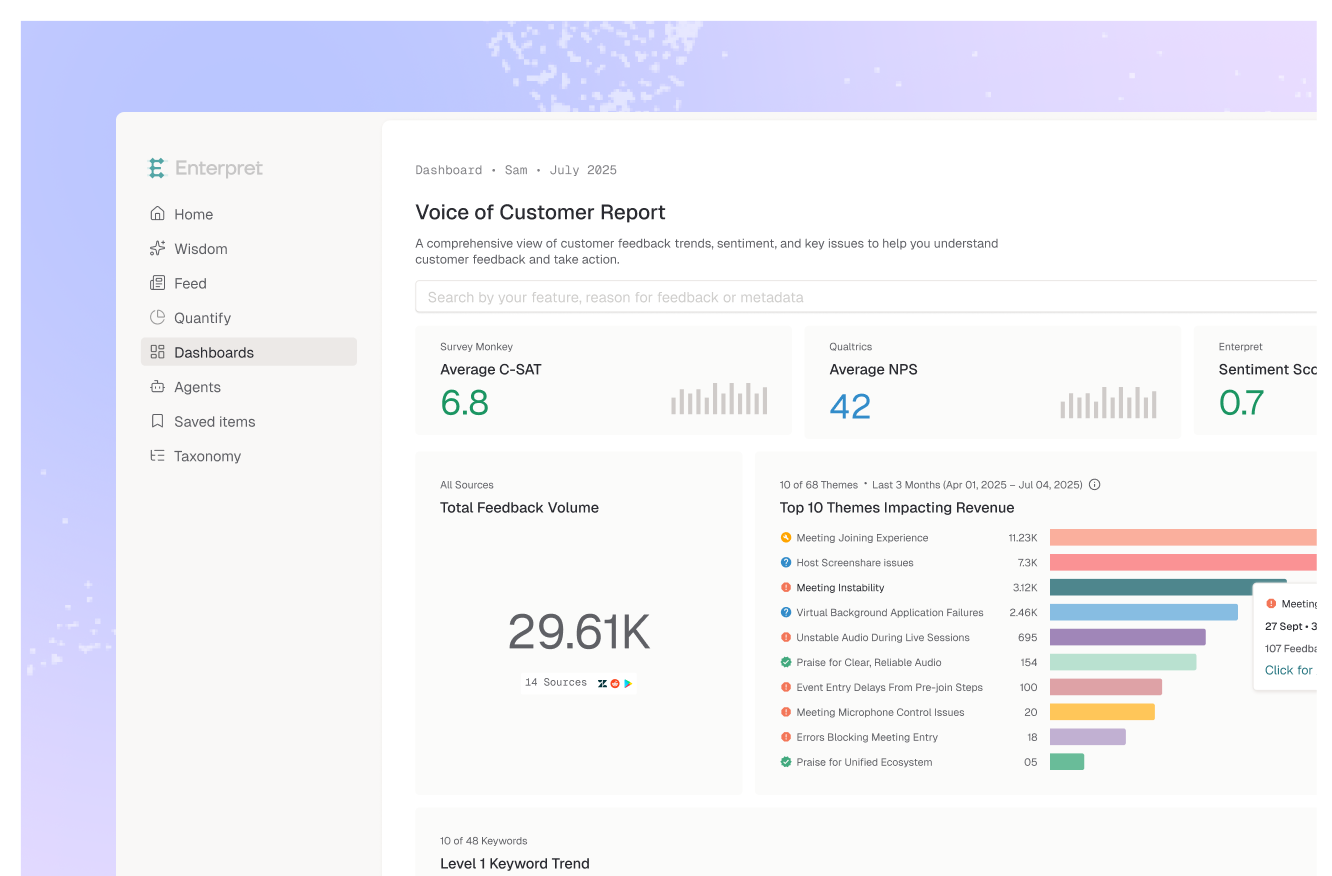

Kayla’s Answer: Use tools like Enterpret for AI-driven analysis and Zendesk for ticketing. Pair automation with manual tagging for higher accuracy. In Zendesk, categorize feedback into key types like bug reports, feature requests, and administrative actions. This structure ensures insights are actionable.

Q: Which team is best positioned to own a VoC program?

Kayla’s Answer: Customer-facing teams (like support or success) should own VoC because they’re closest to customers. Product teams should act as stakeholders, not owners, to avoid biasing feedback to align with their pre-existing goals.

Q: Have you found tools that automate tagging effectively?

Kayla’s Answer: Automated tools exist, but they aren’t perfect—most have an accuracy rate around 60–65%. Manual tagging still delivers higher accuracy (80%+), though it’s time-intensive. A hybrid approach can strike a good balance.
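For anyone wondering what a hybrid approach might look like in practice, here is a minimal sketch in Python. It assumes your automated tagger returns a confidence score with each tag, which was not covered in the conversation; the `AutoTag` class, the `route_tags` function, and the 0.8 threshold are all hypothetical names and values chosen for illustration, not part of any specific tool.

```python
from dataclasses import dataclass

# Hypothetical cutoff; tune it against a manually tagged sample of your own tickets.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class AutoTag:
    ticket_id: int
    tag: str           # e.g. "bug_report", "feature_request"
    confidence: float  # assumed score from the automated tagger, 0.0 to 1.0


def route_tags(auto_tags, threshold=CONFIDENCE_THRESHOLD):
    """Split automated tags into accepted ones and ones queued for manual review."""
    accepted, needs_review = [], []
    for t in auto_tags:
        if t.confidence >= threshold:
            accepted.append(t)
        else:
            needs_review.append(t)
    return accepted, needs_review


# Made-up sample data for illustration only.
sample = [
    AutoTag(1, "feature_request", 0.93),
    AutoTag(2, "bug_report", 0.55),
    AutoTag(3, "administrative_action", 0.81),
]

accepted, needs_review = route_tags(sample)
print([t.ticket_id for t in accepted])      # [1, 3]
print([t.ticket_id for t in needs_review])  # [2]
```

The idea is that agents only spend time on the tickets the automation is unsure about, so manual effort goes where it improves accuracy most.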

Q: Do you provide differentiated paths for serving first-year users?

Kayla’s Answer: Not currently, but it's a great idea. At Asana, the focus was on the first 30 days to address early pain points, such as account setup or onboarding challenges. The key is ensuring your tooling can segment users by sign-up date or account age.
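As a rough illustration of segmenting by account age, the sketch below flags tickets from accounts in their first 30 days. The ticket fields, the `is_new_account` helper, and the sample dates are hypothetical; your support tool will expose sign-up data in its own way.

```python
from datetime import date

# Mirrors the "first 30 days" focus mentioned above; adjust to your own onboarding window.
NEW_ACCOUNT_WINDOW_DAYS = 30


def account_age_days(signup_date: date, today: date) -> int:
    """Number of whole days between sign-up and today."""
    return (today - signup_date).days


def is_new_account(signup_date: date, today: date,
                   window_days: int = NEW_ACCOUNT_WINDOW_DAYS) -> bool:
    """True if the account is still inside the onboarding window."""
    age = account_age_days(signup_date, today)
    return 0 <= age < window_days


# Made-up tickets for illustration only.
tickets = [
    {"id": 101, "signup_date": date(2024, 5, 20)},
    {"id": 102, "signup_date": date(2023, 11, 2)},
]
today = date(2024, 6, 1)

new_account_tickets = [
    t["id"] for t in tickets if is_new_account(t["signup_date"], today)
]
print(new_account_tickets)  # [101]
```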

Q: How are monthly and quarterly VoC reports shared, and what are the expectations?

Kayla’s Answer: Reports are shared via tools like Notion or Slack, but direct communication with teams (e.g., product or support) works best. Reports highlight key themes, possible solutions, and actionable insights. Giving product teams a heads-up before sharing broader recommendations ensures alignment.

Q: How do you recruit customers for further research (interviews, surveys, focus groups)?

Kayla’s Answer: Partner with user research teams or use macros in support tools to follow up on feature requests. Macros can ask customers to provide more details or opt in to follow-up, creating a pool of engaged users for future research.

Q: How do you weigh feedback from different customer segments, such as enterprise vs. free users?

Kayla’s Answer: Segment feedback by user type (e.g., free, paid, enterprise) but avoid overvaluing current ARR. A free user today could represent significant future potential. Highlight trends for each segment and let product and business teams decide prioritization.
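One way to surface trends per segment without weighting by ARR is simply to count themes within each tier and hand the breakdown to product. The sketch below assumes each piece of feedback already carries a tier and a theme tag; the field names, the `themes_by_segment` function, and the sample data are made up for illustration.

```python
from collections import Counter, defaultdict

# Made-up feedback records for illustration only.
feedback = [
    {"tier": "free", "theme": "offline mode"},
    {"tier": "free", "theme": "offline mode"},
    {"tier": "free", "theme": "templates"},
    {"tier": "enterprise", "theme": "sso"},
    {"tier": "enterprise", "theme": "sso"},
    {"tier": "enterprise", "theme": "audit log"},
    {"tier": "paid", "theme": "templates"},
]


def themes_by_segment(items):
    """Count how often each theme appears within each customer tier."""
    counts = defaultdict(Counter)
    for item in items:
        counts[item["tier"]][item["theme"]] += 1
    return counts


counts = themes_by_segment(feedback)
for tier in sorted(counts):
    # Show each segment's themes, most frequent first.
    print(tier, counts[tier].most_common())
```

Because every tier gets its own ranking, a theme that is loud among free users stays visible even when enterprise accounts dominate revenue, and prioritization is left to product and business teams.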

Q: Do manual tags provide better accuracy than automated tagging?

Kayla’s Answer: Yes, manual tagging typically achieves higher accuracy (around 80–90%) compared to automation (60–65%). However, it’s time-intensive and depends on agents maintaining consistent tagging practices, which can be challenging in high-volume environments.

Q: What prompted VoC programs at your companies?

Kayla’s Answer: At Notion, VoC was already in place before Kayla joined. At Asana, the business operations team initiated it. VoC often starts grassroots-style, with customer-facing teams eager to share feedback, but securing resources and buy-in for a formal program requires advocacy and proof of value.

Q: Which departments and roles are most involved in using Enterpret and VoC programs?

Kayla’s Answer: Primarily product teams, engineering, and product specialists (a hybrid role between product and support). Enterpret is made accessible to these teams, with self-service templates enabling them to generate their own reports. Collaboration is key for scalability.

Want More? Let’s Keep the Conversation Going.

We’re building a VoC community where people like Kayla (and you!) can connect, share ideas, and swap stories. Want in?

Until next time—keep listening, learning, and building what customers actually want.